Exploring Consensus With Parallel Proposals: The Difference Between PBFT and BBCA-Chain

Authors: Shivaansh Kapoor, Dahlia Malkhi, Chrysoula Stathakopoulou, Maofan (Ted) Yin

Synopsis

Recent works [BBCA-Chain, Motorway, Cordial-Miners, Shoal, Mysticeti-C, Sailfish] debunk the belief that Block-DAG BFT Consensus protocols have to pay significant latency to achieve throughput scalability. They provide alternative ways for leveraging parallel transaction dissemination.

With BBCA-Chain and Motorway, we’ve returned to utilizing a causal communication transport with a thin “shim” as the fundamental backbone for consensus, rather than employing reliable broadcasts in parallel for proposal (block) dissemination. Have we come a full circle back to PBFT?

In this post, we underscore the similarities and differences between sequential and parallel BFT consensus by comparing two representative protocols. On one side, we put PBFT, the golden standard in BFT consensus for partial synchrony. On the other side, we compare BBCA-Chain, a BFT consensus protocol that embeds the Consensus protocol inside a transport framework that allows for parallelism, namely a DAG framework. BBCA-Chain is perhaps the closest to PBFT among such “Block-DAG” protocols. In both protocols, a standard leader-based consensus is employed for sequencing transactions. The difference distills to the following:

- In PBFT, a leader accumulates transactions directly into proposals. In BBCA-Chain, a leader utilizes the DAG to refer to a causal front of accumulated transactions.

- PBFT involves a sophisticated view change regime to ensure the leader is informed of all views where a proposal has not been committed. BBCA-Chain utilizes the DAG and embeds the view-change information as a causal front.

In the rest of this post, we start with a recap of PBFT, followed by an overview of BBCA-Chain. We then proceed to discuss their similarities and differences.

PBFT

Overview

Practical Byzantine Fault Tolerance, or PBFT for short, is considered to be the first known practical solution to the Byzantine Generals Problem.

PBFT is a view based consensus protocol designed for state machine replication. In each view, a specific leader node is designated, with the other nodes acting as replicas, and consensus on a block is achieved and executed through a three-phase process: pre-prepare, prepare, and commit. The purpose of the pre-prepare phase is for the leader to initiate a consensus round for a block with a unique sequence number. The prepare phase involves the replicas sending prepare messages to their peers, confirming their delivery of the block. Once a node receives a quorum of 2f+1 valid prepare messages, it constructs a prepare quorum certificate (QC) and multicasts a commit message. Upon receiving a quorum of commit messages, the node constructs a commit QC and locally commits the block.

Benign Scenario

As you can see from the lamport diagram above, in this benign scenario, there are no crashes, byzantine failures, or network partitions, so the protocol operates as expected and each node is able to locally commit B1 and B2 by the end of the two consecutive views. Notice that the construction of a prepare QC locks the nodes on the proposal and seals its intention to not accept any conflicting proposal unless it has a higher prepare QC. Now that we have taken a look at the benign case, let’s consider a case with faults.

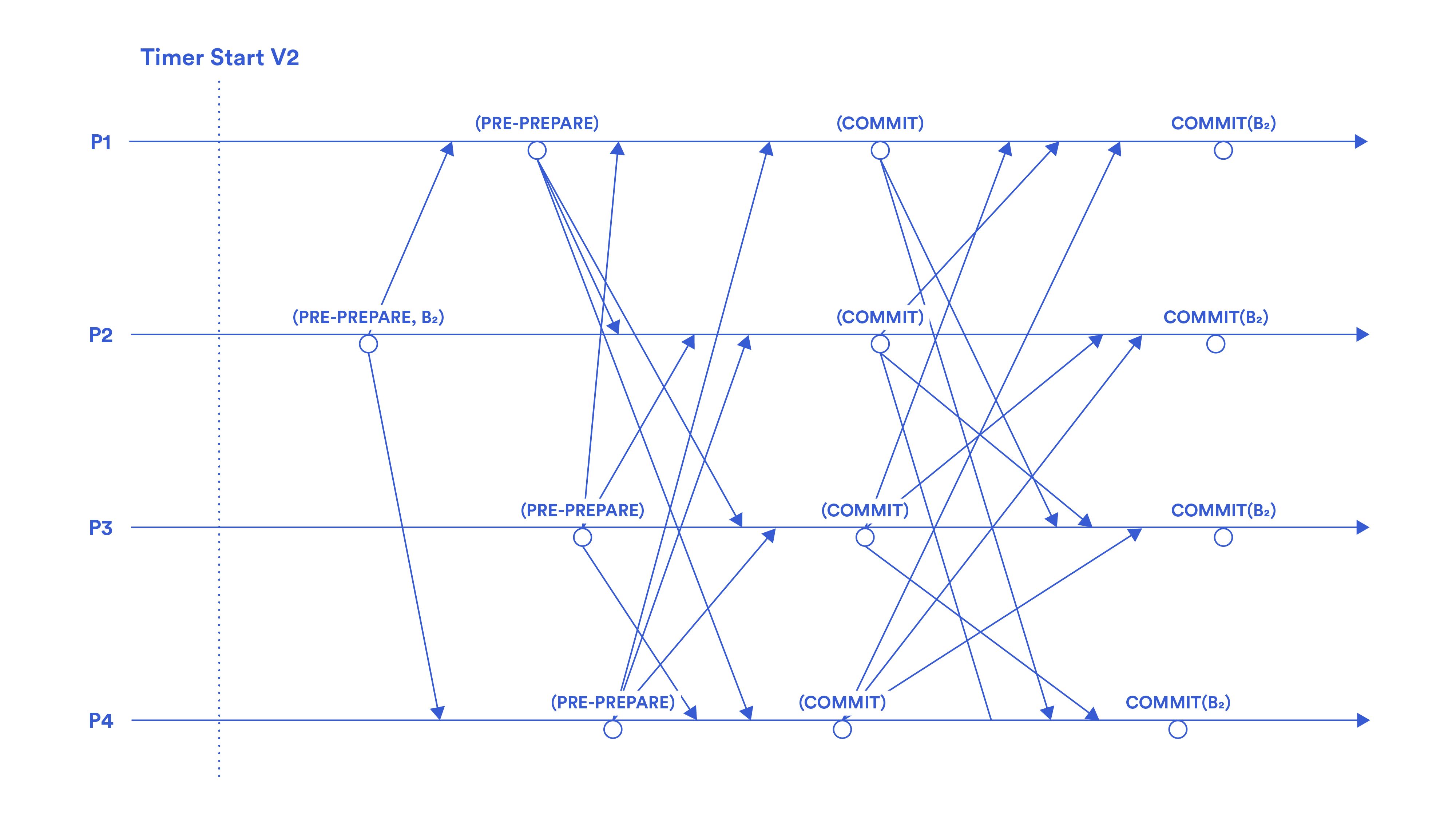

Adversarial Scenario

In the view 2 scenario following a benign view 1 depicted above, P1 and P2 receive a quorum of prepare messages but P3 and P4 do not. Since 2f+1 processes do not move on to the commit phase, no node is able to locally commit the block to their log. This invokes a view-change for view 3. During the view-change phase, P2 deviates from the protocol, and doesn’t send its highest quorum certificate (QC) to the new leader (P3). At this point, it is pivotal that the message highlighted in green is within the first 2f view-change messages received by P3 so that P3 adopts its prepare quorum for view 3. Since this is the case, the protocol proceeds with the three phases and the processes are able to locally commit the block to their logs.

It’s worth noting that the original PBFT paper allows a stable leader to have multiple outstanding proposals, each of which can finalize independently and potentially out of order. However, to employ PBFT for blockchain replication, we used a regime that rotates the leaders regularly, similar to the protocol described in this paper. As a result, because PBFT view-change finalizes pending proposals before proposing in the new view, this variant has no parallelism at the consensus level.

The core PBFT broadcast primitive can be utilized inside a transport framework that allows for parallelism, namely a DAG framework, but in order to do that, we need to allow peeking into the broadcast primitive. This is the foundation for BBCA-Chain.

BBCA-Chain

Overview

Block-DAG consensus protocols are designed to allow for parallel transmissions of blocks while guaranteeing a consistent causal ordering of block delivery through a directed acyclic graph. In Block-DAG consensus, agreement is reached on a chained backbone of blocks (proposed by leaders), and any blocks that are referenced by the backbone are transitively committed at each node by a deterministic graph traversal. Block-DAG consensus protocols, like Bullshark and Tusk, are “DAG-Riding” protocols. These protocols require no extra protocol messages to be used outside the DAG for the consensus protocol. The entire consensus logic is captured through the DAG structure: the position of a message in the DAG conveys the consensus logic it carries.

BBCA-Chain is a solution that aims to simplify “DAG-Riding” Block-DAG consensus in addition to reducing latency compared to other such protocols. In order to understand how BBCA-Chain achieves this, it is vital to understand how blocks are broadcasted in DAGs, namely, the Byzantine Consistent Broadcast (BCB) primitive.

The BCB primitive consists of a multi-step protocol which can be implemented with or without a public key infrastructure (PKI). The implementation using a PKI has two variants, all-to-all and linear, and the implementation without a PKI requires an all-to-all approach. In the context of this post, all references to BCB employ the all-to-all approach with a PKI. This implementation includes an initialization broadcast from one node to all other processes, followed by the recipient processes broadcasting a signed ECHO of the node’s request. Upon receiving 2f+1 echoes for the request, the request can be delivered by a process. A protocol like PBFT uses one BCB with an additional all-to-all round to facilitate commits (in the benign case). Now that we have an understanding of the BCB primitive, let’s take a closer look at the BBCA-Chain protocol.

BBCA-Chain introduces an ejectable variant of BCB called BBCA (Byzantine Broadcast with Complete-Adopt). BBCA allows BBCA-Chain to directly commit blocks, without layering additional votes at the DAG level. BBCA adds a probing API as a shim on top of BCB which looks at the progress of the current view’s BCB to check if a process delivered the proposed value. If it has, then the probing API returns an ADOPT, and if it hasn’t, the API returns a NO-ADOPT. A probe at 2f+1 processes returning NO-ADOPT indicates no honest processes have delivered (completed) the value in the view. Moreover, if a process delivers a value, at least 2f+1 processes will return an ADOPT when being probed. The probing API coupled with the DAG transport is used to facilitate view changes without the extra latency incurred by PBFT’s regime.

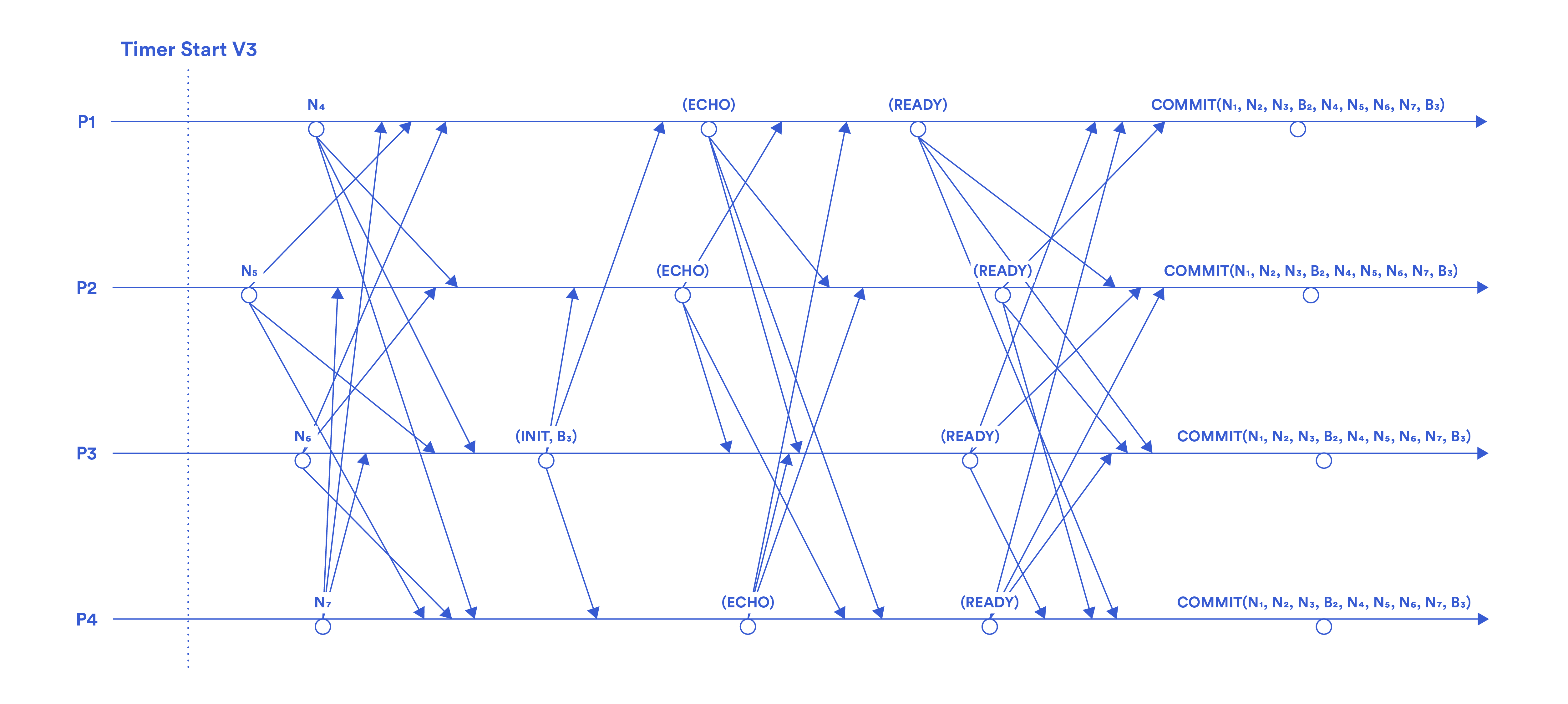

BBCA-Chain uses the BBCA primitive for broadcasting leader proposals and only a best effort broadcast (BEB) for non-leader proposals. Compared to the other Block-DAG protocols, such as those in the “DAG-Riding” family, which require all nodes to use a full-fledged BCB for all proposals, votes, and complaints, BBCA-Chain’s approach drastically reduces the commit latency and number of transmissions.

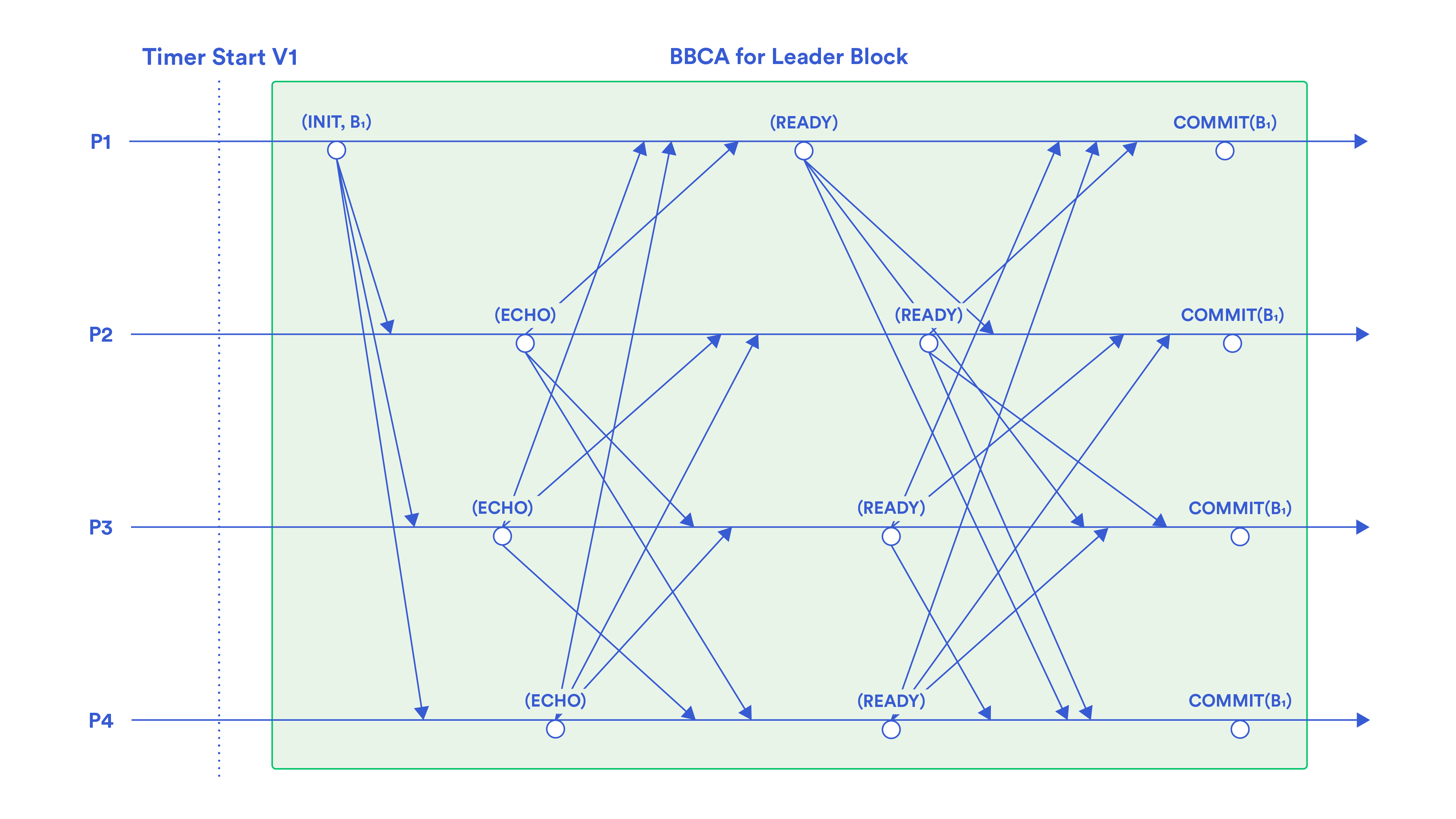

Benign Scenario

The benign scenario above shows the first two views of BBCA-Chain. At first glance, this scenario looks very similar to the benign scenario for PBFT. The only two differences are the non-leader blocks sent using BEB after a view ends and the commitment of multiple blocks in a single view facilitated by the DAG transport. Since non-leader blocks causally reference the last ready QC they received, the next leader can wait for these blocks to obtain the ready QC for the current view if it didn’t receive 2f+1 ready messages. Understanding that the echo and ready messages force honest nodes to deliver and commit the block respectively upon 2f+1 receptions is imperative to understanding how BBCA-Probe is able to handle voting without any additional blocks or layers on the DAG. We will look at this routine closely in the adversarial scenario.

Adversarial Scenario

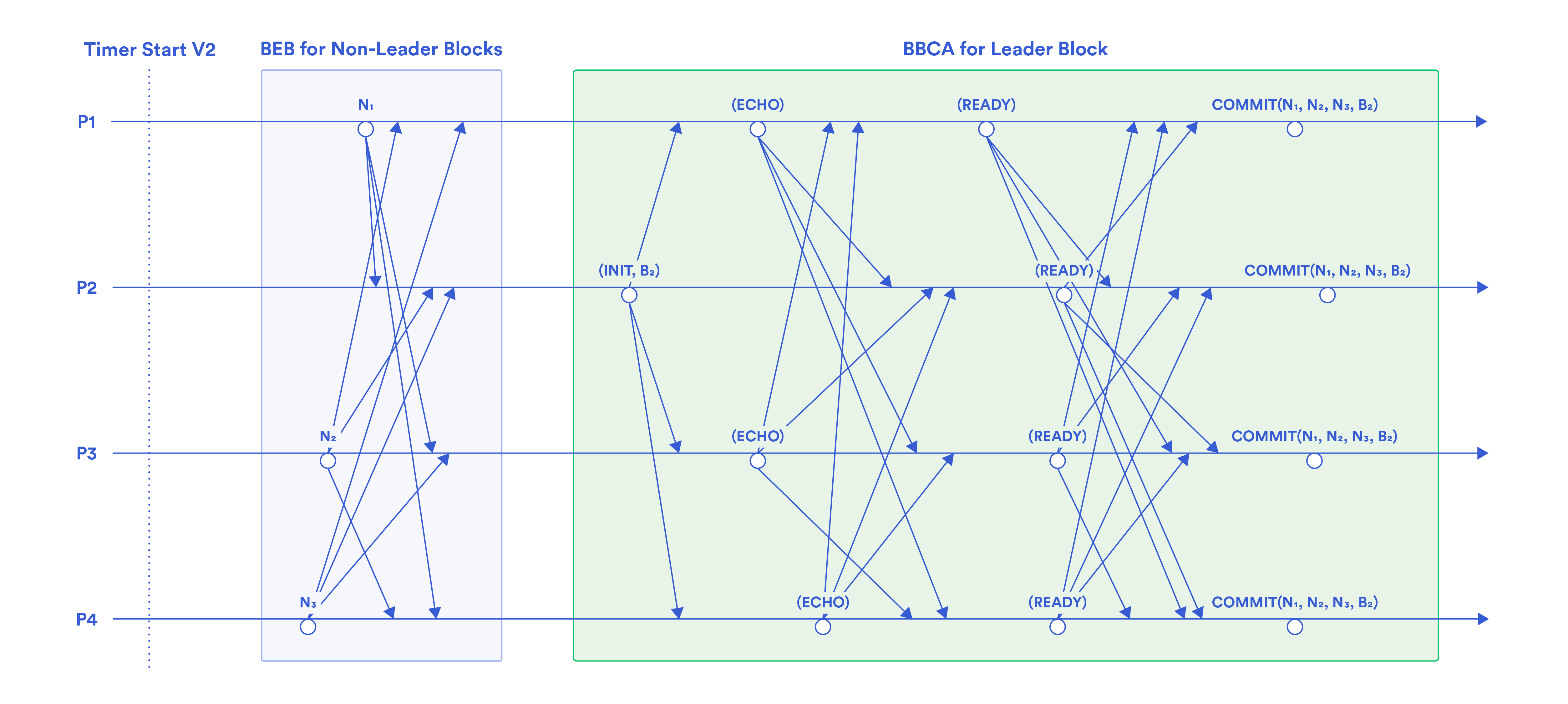

The adversarial scenario diagram above shows two consecutive views, succeeding view 1 from the benign scenario diagram. In view 2, only 2f nodes (P1 and P2) are able to broadcast ready messages and lock on the block, so there is no possibility of a commit for view 2. When the timer elapses at each process, they all run a BBCA-Probe to peek inside the current view’s BCB and check if they are in a locked state or not. Since P1 and P2 are locked on B2 BBCA-Probe returns ADOPT, and they attach the adopted block and its lock QC to their new view block message. Conversely, since P3 and P4 didn’t lock, BBCA-Probe returns NO-ADOPT, and they multicast their new view block with a NO-ADOPT. In the succeeding view, the leader (P3) uses the received new view blocks, and references the new view blocks. View 3 completes in a benign fashion, recursively committing the causally referenced blocks and current view’s block.

Notice that if P1 or P2 were byzantine, P3 could receive 2f+1 NO-ADOPTs and continue to view 3 without adopting view 2’s lock QC. Moreover, B2 and its predecessors wouldn’t be committed.

Comparison

Latency

Now that we have described PBFT and BBCA-Chain, let’s take a look at their similarities, differences, and their respective advantages and disadvantages.

Firstly, we already saw that the two protocols are similar in terms of the flow of transmissions across processes. Each view has three phases. The first phase involves the leader broadcasting a proposal, the second aims to create a lock QC, and the third aims to create a QC that enables local commitments. In the case that a lock QC can’t be constructed, PBFT nodes use their view-change regime to multicast a NIL QC, while BBCA-Chain nodes invoke their probing routine and multicast a NO-ADOPT with a non-leader block. Similarly, in the case that a lock QC exists but a commit QC doesn’t, PBFT nodes use a complex view-change regime to multicast the lock QC while BBCA-Chain nodes invoke their probing routine and multicast an ADOPT with a non-leader (BEB) block. Both of these approaches effectively do the same thing: ensure that the next leader has the highest QC for the current view and can base the next proposal on that knowledge. The key difference is that PBFT leaders use the view-change regime to form a proof of the highest lock QC they received from the first 2f+1 responses to their view change, to include in their next proposal. Conversely, a BBCA-Chain leader refers to the BEB blocks carrying ADOPT/NO-ADOPT for the previous view’s block in its next proposal. The QCs for leader blocks also serve as QCs for their causal predecessors: allowing BBCA-Chain to commit multiple blocks per view. Other than this, PBFT and BBCA-Chain are almost identical protocols at their cores.

Throughput

BBCA-Chain can achieve higher throughput than PBFT. Why is this the case? BBCA-Chain allows for parallel transmissions of blocks by different nodes, forming a DAG of blocks and their causal links. Whereas, in PBFT, transactions may disseminate in parallel as well, PBFT relies on a single node at a time, the leader, to reference an explicit ordering of transactions in their proposals. This key difference allows nodes in BBCA-Chain to commit an arbitrarily large backlog of blocks by just having knowledge of the latest causal front. During a view-change, PBFT requires nodes to re-broadcast all the uncommitted lock QCs. In contrast, BBCA-chain leverages causality to encode this information directly in the DAG. Thus, BBCA-Chain requires both reduced communication complexity and a less sophisticated view-change protocol.

The Shared Mempool Duplicates Conundrum

One might ask whether parallel proposing (e.g., BBCA-Chain) is strictly superior to sequential (e.g., PBFT)? The answer is no, it depends on the workload.

Let’s assume a system that has a constant backlog of transactions – there are always more transactions waiting to be included in a block than can fit in the current block at each process. This assumption is practical, as most popular public blockchains experience it constantly.

Let’s take a look at the transaction dissemination logic which determines the state of the mempool at each process. There are essentially three ways for a node to disseminate transactions it receives from clients. Once a node receives a transaction, it can (1) hold the transaction in its mempool until it becomes the leader, (2) send it to whom it believes is the next leader, or (3) propagate it to the other nodes through point-to-point channels or a gossip protocol. The first option has a linear latency for transaction inclusion, in terms of the number of nodes, and results in private mempools at each process. The second and third options have an ostensibly faster inclusion time, but result in shared mempools.

Furthermore, for a protocol like PBFT, all approaches are viable since there is a single block proposed per view, and a shared mempool may potentially have low(er) latency. However, for Block-DAG protocols like BBCA-Chain that support parallel proposals, employing a shared mempool scheme requires handling duplicates.

Since nodes are incentivized to propose blocks with the highest fee transactions, the shared mempool approach would have all nodes prioritizing the same transactions, virtually guaranteeing conflicts. If conflicts are endemic using a shared mempool, BBCA-Chain may result in more transmissions and computation required at each process without providing higher throughput, if at all, compared to PBFT.

What can be proposed in parallel?

An important aspect of parallel proposing which is often overlooked is whether and how parallel blocks can be proposed in parallel in practice.

In many cases, parallel construction of blocks does not make sense. In particular, in blockchains that support general-purpose smart contract chains, e.g. Ethereum, proposers construct blocks only after learning the latest, committed state. These systems opt for blocks to be produced by a single leader assigned to a particular consensus slot. Leaders may further delegate the task to block-builders, adhering to the proposer-builder-separation regime, who need to operate over the latest committed state in order to maximize their profit.

In other cases, e.g., some blockchains like COTI, Sui, Linera, and other payment-focused platforms, parallel transactions are the norm, hence parallel block proposing is possible. It is worth remarking that the data model in these blockchains is different from vanilla smart contract, e.g., Sui has “owned” objects which offer low latency and quick finality by bypassing consensus, but they are restricted to access by their owners and may complicate multi-party transactions.

All in all, the choice of what to propose in parallel, if at all, is specific to the application and the methodology for managing the system’s state.

Bibliography

- Miguel Castro and Barbara Liskov. Practical Byzantine Fault Tolerance, pmg.csail.mit.edu/papers/osdi99.pdf

- Giuliana Santos Veronese, Miguel Correia, Alysson Neves Bessani, Lau Cheuk Lung. Spin One’s Wheels? Byzantine Fault Tolerance with a Spinning Primary | IEEE Conference Publication | IEEE Xplore, 9 Oct. 2009, ieeexplore.ieee.org/document/5283369

- Alexander Spiegelman, Neil Giridharan, Alberto Sonnino, Lefteris Kokoris-Kogias. “Bullshark: Dag BFT Protocols Made Practical.” arXiv.Org, 7 Sept. 2022, arxiv.org/abs/2201.05677

- George Danezis, Eleftherios Kokoris Kogias, Alberto Sonnino, Alexander Spiegelman. “Narwhal and Tusk: A Dag-Based Mempool and Efficient BFT Consensus.” arXiv.Org, 16 Mar. 2022, arxiv.org/abs/2105.11827

- Dahlia Malkhi. “BFT on a Dag.” Chainlink Blog, 23 Sept. 2022, blog.chain.link/bft-on-a-dag

- Idit Keidar, Oded Naor, Ouri Poupko, Ehud Shapiro. “Cordial Miners: Fast and Efficient Consensus for Every Eventuality.” arXiv.Org, 22 Sept. 2023, arxiv.org/abs/2205.09174

- Dahlia Malkhi, Chrysoula Stathakopoulou, Maofan Yin. “BBCA-Chain: One-Message, Low Latency BFT Consensus on a DAG.” FC’24, 10 Oct. 2023, fc24.ifca.ai/preproceedings/47.pdf

- Alexander Spiegelman, Balaji Arun, Rati Gelashvili, Zekun Li. “Shoal: Improving Dag-BFT Latency and Robustness.” arXiv.Org, 7 July 2023, arxiv.org/abs/2306.03058

- Kushal Babel, Andrey Chursin, George Danezis, Anastasios Kichidis, Lefteris Kokoris-Kogias, Arun Koshy, Alberto Sonnino, Mingwei Tian. “Mysticeti: Reaching the Limits of Latency with Uncertified Dags.” arXiv.Org, 30 Apr. 2024, arxiv.org/abs/2310.14821

- Neil Giridharan, Florian Suri-Payer, Ittai Abraham, Lorenzo Alvisi, Natacha Crooks. “Motorway: Seamless High Speed BFT.” arXiv.Org, 18 Jan. 2024, arxiv.org/abs/2401.10369

- Nibesh Shrestha, Rohan Shrothrium, Aniket Kate, Kartik Nayak. “Sailfish: Towards Improving Latency of DAG-based BFT”. eprint.iacr.org, 20 March 2024. https://eprint.iacr.org/2024/472